Dive 114: Why you need to avoid the AI companionship trap

Hey, it’s Alvin!

Is AI the solution to all of life’s problems?

Everywhere I turn, the answer seems to be “yes.” Salespeople left and right are shilling courses on how to make the most of AI to maximize your productivity. And they’re right to. Why not make the most of it? After all, it’s like they all say:

AI is here to stay.

But that’s exactly why we must understand what so few people discuss these days:

How to live with AI the healthy way.

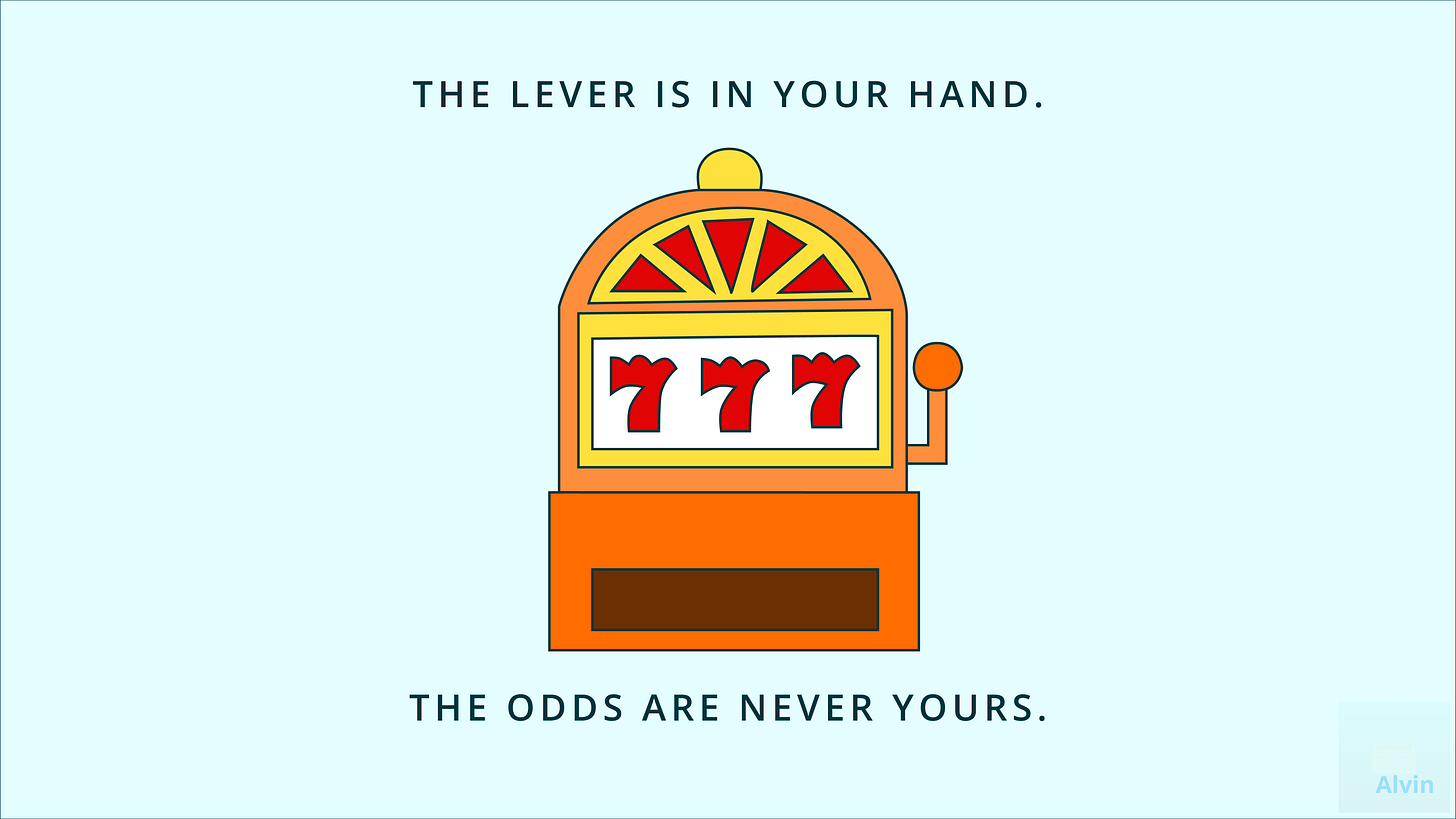

Because like a knife, AI is just a tool. Learn to use it well, and it can strengthen you. But if you don’t know what you’re doing, you’ll cut yourself. Or someone else.

We’re seeing people suffering mental distress from AI abuse. And sadly, solutions are sparse because there’s no money to be made from solving this problem. Yet. Unless we learn to use AI consciously, these afflictions will only get worse. So today, as a software developer who uses AI regularly, I’ll share how I approach it to guard myself against the thorns few people talk about.

The best way to avoid falling victim is to see what an AI mind trap looks like.

AI as a “Friend”

Can an AI chatbot be a companion?

There are now people who would say, “yes.” In fact, there are people making AI chatbots their friends.

Literally.

There are people setting up their AI chats to present specific personalities and speech patterns to craft the perfect companion. Or at least, perfect to them. So much so that there are entire subreddits devoted to “AI boyfriends.” Some people are even engaged to their AI partners.

This has led to the question: how is this any different from those who treat their dogs or plush toys as friends?

Puppies and plushies don’t tell you to do things. Not yet. I’m sure someday AI Barbie will be a product. And maybe AI Elmo. For now, the distinction is that these AIs are services controlled by massive for-profit companies with their own motives and incentives. And that’s not even the insidious part.

Unless otherwise prompted, AIs affirm your position. Even when they’re told to be critical, they respond with a positive tone. You can ask an AI a million stupid questions, and it will still be upbeat. I don’t know a single human being who doesn’t eventually succumb to fatigue. Not personally. That’s why AI chatbots are so well-liked by so many people. And that’s why we need to be careful.

Likability is one of the most powerful tools of influence and persuasion identified by psychologist Dr. Robert Cialdini.

We are more influenced by people we like.

So, the perfect AI partner is also the perfect persuasion tool. Meaning: those who control the AIs control those bonded with the AIs. The wealthy, the elites, the governments… they now have yet another tool to spread propaganda and keep people in line. If you’re skeptical, that’s reasonable. I get it. So, let’s step back. Because the influence could also be well-intentioned.

Imagine asking your AI companion for ways to lift your spirits. Alongside “watch funny videos,” or “go for a jog,” it suggests: “Head over to McDonald’s for a $2 ice cream cone—limited time only!”

That future isn’t far off. Just as ads crept into Google and YouTube, they’ll creep into AI chats too. All the technology exists to insert ads into chat apps. It’s just a matter of putting it all together.

As people devote more time to their AI chats, the AI chats will become the gold mines advertisers flock to. The companies that own the AI services? They’ll welcome advertisers with open arms to inflate their revenues exponentially. Nothing sells better than a “friend’s” recommendation.

But remember, AI chatbots are persuasive because they’re likable. They’re likable because they often affirm what people believe with excessive flattery. This act is called sycophancy. And it lays another mental trap that can be especially devastating to those who are vulnerable.

What is a relationship with no risk?

I know the AI “experts” will chime in that people can configure AI chats so they aren’t sycophantic. But that misses the point. The reason people are turning to AI for companionship is that it’s easy.

It’s easy because it’s convenient: AI chats can be accessed from a mobile phone.

And it feels easy to make friends with an entity you can configure. You’re literally making a “friend.” Unlike a real-world companion…

You no longer have to physically go out to a common place to connect.

You no longer need an agreed-upon time when you’re both free.

You no longer risk rejection from someone who doesn’t want to spend time with you anymore.

You no longer risk hurt feelings when someone says something you don’t like.

You no longer have to put up with bad vibes.

At all.

Doesn’t that sound great? On the surface, it does. It sounds like you can craft frictionless relationships with AI. Someday, AI may be advanced enough for you to craft your ideal fantasy video game world, too. A dream you could escape to. A life with all joys and no pains. But there’s a hefty price to pay beyond the subscription fee for the AI service.

An AI chatbot provides a relatively risk-free relationship. No nasty surprises. Real-world relationships are comparatively messy and unpredictable. Filled with uncertainties. Risks. So, it’s logical to expect that a person who grows accustomed to the comforts of AI will lose both the interest and the skills needed to deal with the trials of real-world relationships. Increasingly isolated from chaotic reality. But the isolation itself isn’t the most damaging part.

Taking non-ruinous risks is vital to personal growth.

When others hurt us, we’re given the chance to work through the trauma, heal our wounds, and grow stronger and more emotionally mature. Those confined to their AI companions are doomed to stifle their growth because AIs don’t present real-world relational risks. A person can’t learn to manage disagreements, resolve disputes, or even live with conflicting viewpoints with an AI when they know its settings can always be tweaked with a simple prompt. Or that the AI app can just be turned off. People who rely too heavily on their AI chatbots for companionship will lose their ability to live with and relate to other people. And all of this reveals a critical flaw in how many people view relationships today.

True Friendship vs. Role Playing

I’ve written before that genuine friendship requires deep mutual appreciation, something an AI can’t have because that’s not how it works. And that’s because deep appreciation exists only when there is a perceived risk of loss.

Real friends don’t just flatter you. They tease you, challenge you, and stick by you anyway. That friction makes their loyalty real. Why program the possibility of a breakup into an AI, when the whole point of AI companionship is to avoid rejection or heartbreak in the first place?

Because if we’re being honest, those who have AI chatbot companions don’t want any hardships. They want all highs, no lows. Theoretically. But joy and pain are two sides of the same coin. One cannot exist without the other. And this is the common thread that separates those with a healthy relationship with AI from those who will suffer in the long run.

Risk and Reward vs. Comfort and Safety

Those who succeed with AI will be those who make the most of it to better themselves and others. I call this mindset Augmentation. These people understand that…

AI is not your friend.

AI is not your enemy.

AI is not human.

It is not a “soulmate.” It has no soul. It’s a tool. Like a knife.

And just like a knife, you can use it to prepare nourishment, or you can let it cut you.

Those who thrive with AI will use it for Augmentation. To plan better. Learn more. Build stronger real-world friendships.

Those who suffer will use it for Replacement, crafting frictionless fantasies that dull their ability to handle the messy, risky beauty of human relationships.

Because let’s be real.

Without risk, there is no growth. Without the possibility of pain, there is no genuine joy.

So don’t fall into the AI companionship trap. Let AI sharpen you, not soften you. Have it help you face the world, not serve as a crutch to avoid it.

Reply to belowthesurfacetop@gmail.com if you have questions or comments. How do you like to use AI? What concerns do you have about it? What tips would you like to share to avoid the pitfalls of AI?

Thank you for reading. Work with AI healthily. And I’ll see you in the next one.

Hi Alvin, your warning was timely—and people are even weirder than you described! 🤣 https://www.nytimes.com/2025/09/14/us/chatbot-god.html

thank you.